Trace with LangChain (Python and JS/TS)

LangSmith integrates seamlessly with LangChain (Python and JS), the popular open-source framework for building LLM applications.

Installation

Install the core library and the OpenAI integration for Python and JS (we use the OpenAI integration for the code snippets below).

For a full list of packages available, see the LangChain Python docs and LangChain JS docs.

Use the command for your package manager: pip for Python, or yarn, npm, or pnpm for JS/TS.

pip install langchain_openai langchain_core

yarn add @langchain/openai @langchain/core

npm install @langchain/openai @langchain/core

pnpm add @langchain/openai @langchain/core

Quick start

1. Configure your environment

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_API_KEY=<your-api-key>

# The examples below use the OpenAI API, so you will also need an OpenAI API key

export OPENAI_API_KEY=<your-openai-api-key>
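If you would rather configure tracing from Python than from the shell, the same variables can be set through `os.environ` before any LangChain code runs. The following is a minimal sketch using only the standard library. `missing_tracing_vars` is a hypothetical helper (not part of LangChain or LangSmith) that reports which of the variables above are still unset.

```python
import os

# Variables named in the shell snippet above.
REQUIRED_VARS = ("LANGCHAIN_TRACING_V2", "LANGCHAIN_API_KEY", "OPENAI_API_KEY")


def missing_tracing_vars(env=None):
    """Return the required variable names that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    # Equivalent to `export LANGCHAIN_TRACING_V2=true`; setdefault keeps any
    # value that was already exported in the shell.
    os.environ.setdefault("LANGCHAIN_TRACING_V2", "true")
    missing = missing_tracing_vars()
    if missing:
        print("Set these before running your chain:", ", ".join(missing))
```

Keep API keys out of source control; the helper only checks for their presence and never prints their values.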

2. Log a trace

No extra code is needed to log a trace to LangSmith. Just run your LangChain code as you normally would.

The Python example is shown first, followed by the TypeScript equivalent.

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_messages([

    ("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),

    ("user", "Question: {question}\nContext: {context}")

])

model = ChatOpenAI(model="gpt-3.5-turbo")

output_parser = StrOutputParser()

chain = prompt | model | output_parser

question = "Can you summarize this morning's meetings?"

context = "During this morning's meeting, we solved all world conflict."

chain.invoke({"question": question, "context": context})

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

const prompt = ChatPromptTemplate.fromMessages([

  ["system", "You are a helpful assistant. Please respond to the user's request only based on the given context."],

  ["user", "Question: {question}\nContext: {context}"],

]);

const model = new ChatOpenAI({ modelName: "gpt-3.5-turbo" });

const outputParser = new StringOutputParser();

const chain = prompt.pipe(model).pipe(outputParser);

const question = "Can you summarize this morning's meetings?";

const context = "During this morning's meeting, we solved all world conflict.";

await chain.invoke({ question: question, context: context });

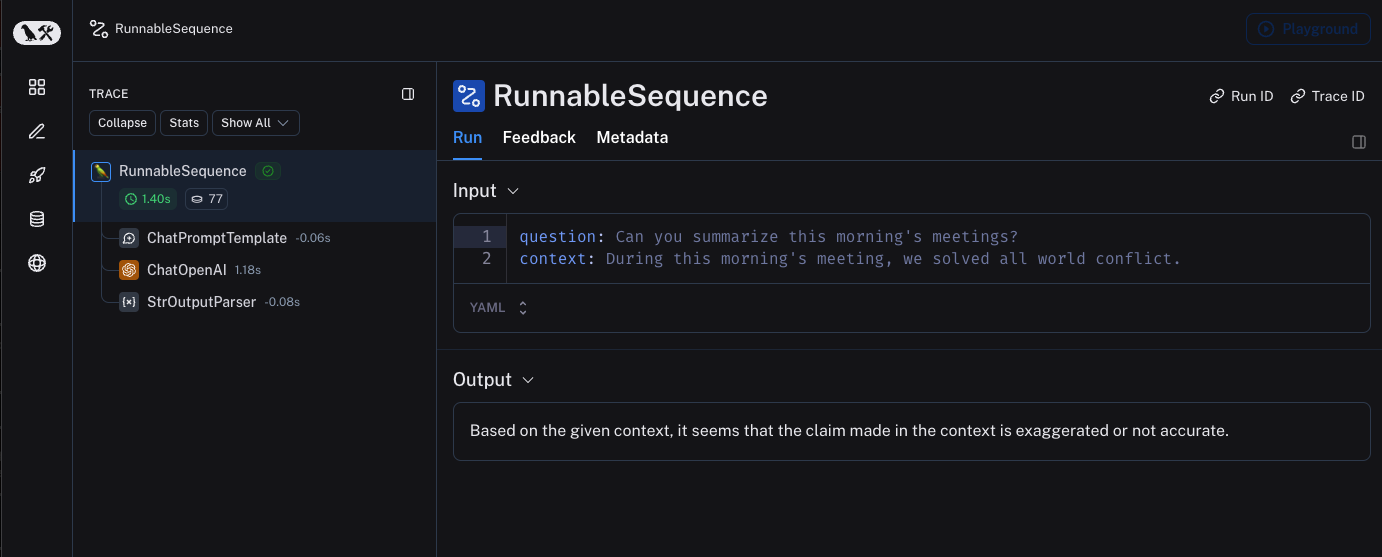

3. View your trace

By default, the trace is logged to the project named default. An example of a trace logged using the above code has been made public and can be viewed here.

Log to a specific project

Statically

LangSmith uses the concept of a Project to group traces. If left unspecified, the tracer project is set to default. To use a custom project name for an entire application run, set the LANGCHAIN_PROJECT environment variable before executing your application.

export LANGCHAIN_PROJECT=my-project
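The same setting can be applied from Python, as long as it happens before the application's LangChain code runs. This is a minimal sketch that assumes only the rule described above (a custom name from `LANGCHAIN_PROJECT`, otherwise `default`). `resolve_project_name` is a hypothetical helper for illustration; it is not how LangSmith itself reads the variable, and treating an empty value as unset is an assumption.

```python
import os


def resolve_project_name(env=None):
    """Return the project name traces would be grouped under.

    Mirrors the rule described in the text: use LANGCHAIN_PROJECT when it
    is set to a non-empty value, otherwise fall back to "default".
    """
    env = os.environ if env is None else env
    return env.get("LANGCHAIN_PROJECT") or "default"


if __name__ == "__main__":
    # Same effect as `export LANGCHAIN_PROJECT=my-project` for this process.
    os.environ["LANGCHAIN_PROJECT"] = "my-project"
    print(resolve_project_name())
```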

Dynamically

Python first, then TypeScript:

# You can set the project name for a specific tracer instance.

# (This assumes `chain` is a runnable that accepts a "query" input.)

from langchain_core.tracers import LangChainTracer

tracer = LangChainTracer(project_name="My Project")

chain.invoke({"query": "How many people live in Canada as of 2023?"}, config={"callbacks": [tracer]})

# LangChain Python also supports a context manager for tracing a specific block of code.

# You can set the project name using the project_name parameter.

from langchain_core.tracers.context import tracing_v2_enabled

with tracing_v2_enabled(project_name="My Project"):

    chain.invoke({"query": "How many people live in Canada as of 2023?"})

// You can set the project name for a specific tracer instance:

import { LangChainTracer } from "@langchain/core/tracers/tracer_langchain";

const tracer = new LangChainTracer({ projectName: "My Project" });

await chain.invoke(

  {

    query: "How many people live in Canada as of 2023?"

  },

  { callbacks: [tracer] }

);

Add metadata and tags to traces

LangSmith supports sending arbitrary metadata and tags along with traces. This is useful for associating additional information with a trace, such as the environment in which it was executed or the user who initiated it. For information on how to query traces and runs by metadata and tags, see this guide.

The Python version comes first, then the TypeScript version.

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_messages([

    ("system", "You are a helpful AI."),

    ("user", "{input}")

])

chat_model = ChatOpenAI()

output_parser = StrOutputParser()

# Tags and metadata can be configured with RunnableConfig

chain = (prompt | chat_model | output_parser).with_config({"tags": ["top-level-tag"], "metadata": {"top-level-key": "top-level-value"}})

# Tags and metadata can also be passed at runtime

chain.invoke({"input": "What is the meaning of life?"}, {"tags": ["shared-tags"], "metadata": {"shared-key": "shared-value"}})

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

const prompt = ChatPromptTemplate.fromMessages([

  ["system", "You are a helpful AI."],

  ["user", "{input}"]

]);

const model = new ChatOpenAI({ modelName: "gpt-3.5-turbo" });

const outputParser = new StringOutputParser();

// Tags and metadata can be configured with RunnableConfig

const chain = (prompt.pipe(model).pipe(outputParser)).withConfig({"tags": ["top-level-tag"], "metadata": {"top-level-key": "top-level-value"}});

// Tags and metadata can also be passed at runtime

await chain.invoke({input: "What is the meaning of life?"}, {tags: ["shared-tags"], metadata: {"shared-key": "shared-value"}})
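Because the runtime config is a plain dictionary, it can be assembled per request. The sketch below shows one way to do that in Python. `build_trace_config`, the `env:` tag prefix, and the `user_id` and `environment` metadata keys are illustrative choices, not names that LangSmith requires.

```python
def build_trace_config(user_id, environment, extra_tags=()):
    """Build a config dict with the "tags" and "metadata" keys used above."""
    return {
        # Tags are a flat list of strings.
        "tags": [f"env:{environment}", *extra_tags],
        # Metadata is a key-value mapping.
        "metadata": {"user_id": user_id, "environment": environment},
    }


config = build_trace_config("user-123", "staging", extra_tags=["shared-tags"])
# Pass it as the second argument at call time, as in the example above:
# chain.invoke({"input": "What is the meaning of life?"}, config)
```

Building the dictionary in one place keeps tag and metadata naming consistent, which should make later filtering by those values more predictable.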

Customize run name

When you create a run, you can specify a name for the run. This name is used to identify the run in LangSmith and can be used to filter and group runs. The name is also used as the title of the run in the LangSmith UI.

Python, followed by TypeScript:

# When tracing within LangChain, run names default to the class name of the traced object (e.g., 'ChatOpenAI').

# (Note: this is not currently supported directly on LLM objects.)

...

configured_chain = chain.with_config({"run_name": "MyCustomChain"})

configured_chain.invoke({"query": "What is the meaning of life?"})

// When tracing within LangChain, run names default to the class name of the traced object (e.g., 'ChatOpenAI').

// (Note: this is not currently supported directly on LLM objects.)

...

const configuredChain = chain.withConfig({ runName: "MyCustomChain" });

await configuredChain.invoke({query: "What is the meaning of life?"});